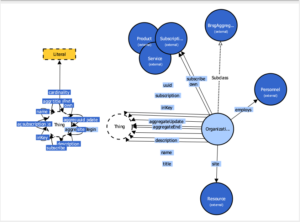

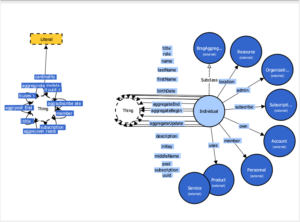

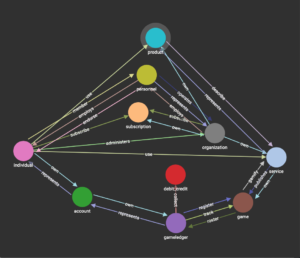

Temporal Linked Data® (TLD) and TigerGraph’s third-generation Labeled Property Graph database serve different purposes, so their schemas differ slightly in structure. In this TigerGraph schema image, every aggregate vertex has a corresponding change vertex. The attributes of the debit_credit_change vertex are shown as an example.

Temporal Linked Data® Agile Gamification example in TigerGraph (Image credit: TigerGraph Graph Studio)

Where TLD keeps changes sparse (only what has changed) as an ordered collection stored with the aggregate (vertex) to which they belong, we have modeled our TigerGraph schema with a “changes” edge between each aggregate vertex and its change vertices. Each change published to TigerGraph results in a new change vertex and a new edge linking it to the aggregate, while the aggregate itself simply reflects the current state.
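As a rough illustration of this pattern, here is a minimal in-memory sketch in plain Python (not GSQL and not the actual TLD or TigerGraph API). The class names, the `publish_change` helper, and the `amount` and `memo` attributes are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class ChangeVertex:
    """One sparse change: holds only the attributes that changed."""
    sequence: int
    changed: Dict[str, Any]


@dataclass
class AggregateVertex:
    """Holds the current state; `changes` stands in for the "changes" edges."""
    vertex_id: str
    state: Dict[str, Any] = field(default_factory=dict)
    changes: List[ChangeVertex] = field(default_factory=list)


def publish_change(aggregate: AggregateVertex, changed: Dict[str, Any]) -> ChangeVertex:
    """Attach a new change vertex to the aggregate, then fold it into current state."""
    change = ChangeVertex(sequence=len(aggregate.changes) + 1, changed=dict(changed))
    aggregate.changes.append(change)   # new "changes" edge plus change vertex
    aggregate.state.update(changed)    # aggregate reflects only the current state
    return change


# Hypothetical debit_credit aggregate receiving two changes.
entry = AggregateVertex("debit_credit-1")
publish_change(entry, {"amount": 10, "memo": "helped a colleague"})
publish_change(entry, {"amount": 15})  # sparse: only the amount changed
```

After both calls, the aggregate carries the merged current state, while each change vertex retains only what changed at that step, in order.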

TigerGraph is built to perform deep analysis across many “hops” (physical edges between vertices). Writing graph queries against this schema, with its ten aggregate vertices and their ten corresponding change vertices, is light work for TigerGraph. Example analytic queries might include:

For a game, which individual has the highest score? This query does not require any temporal data, as the debit_credit aggregate keeps a running tally. The traversal involves five hops across game, gameledger, debit_credit, account, and individual.

For a game, which individual has received the most positive comments from their colleagues? This query requires the temporal data in debit_credit_change but is easily satisfied. There are six hops to answer this query.

For a game, which individual has been the most helpful to their colleagues? Given the autogenerated events and specific collegial input, this query is easily satisfied with the same six hops.
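To make the difference between the current-state and temporal queries concrete, here is a small sketch in plain Python over toy data. It is not GSQL and not how TigerGraph executes these queries. The identifiers, the `tally` and `kind` attributes, and all sample values are assumptions made for illustration; only the vertex type names come from the schema described above.

```python
from collections import defaultdict

# Hypothetical toy graph, keyed by vertex id.
GAME_TO_LEDGER = {"game-1": "ledger-1"}
LEDGER_TO_DEBIT_CREDITS = {"ledger-1": ["dc-1", "dc-2"]}
DEBIT_CREDIT = {
    "dc-1": {"account": "acct-a", "tally": 40},  # running tally (current state)
    "dc-2": {"account": "acct-b", "tally": 30},
}
ACCOUNT_TO_INDIVIDUAL = {"acct-a": "alice", "acct-b": "bob"}
# debit_credit_change vertices reached through the "changes" edge.
CHANGES = {
    "dc-1": [
        {"amount": 25, "kind": "task_completed"},
        {"amount": 15, "kind": "positive_comment"},
    ],
    "dc-2": [
        {"amount": 10, "kind": "positive_comment"},
        {"amount": 10, "kind": "positive_comment"},
        {"amount": 10, "kind": "positive_comment"},
    ],
}


def debit_credits_for(game_id):
    """Walk game -> gameledger -> debit_credit."""
    return LEDGER_TO_DEBIT_CREDITS[GAME_TO_LEDGER[game_id]]


def highest_score(game_id):
    """Current state only: sum the running tallies per individual."""
    totals = defaultdict(int)
    for dc_id in debit_credits_for(game_id):
        dc = DEBIT_CREDIT[dc_id]
        totals[ACCOUNT_TO_INDIVIDUAL[dc["account"]]] += dc["tally"]
    return max(totals, key=totals.get)


def most_positive_comments(game_id):
    """Temporal: count matching change vertices per individual."""
    counts = defaultdict(int)
    for dc_id in debit_credits_for(game_id):
        person = ACCOUNT_TO_INDIVIDUAL[DEBIT_CREDIT[dc_id]["account"]]
        for change in CHANGES.get(dc_id, []):
            if change["kind"] == "positive_comment":
                counts[person] += 1
    return max(counts, key=counts.get)
```

In this toy data the two questions have different answers: the running tally alone cannot say who received the most positive comments, which is why the second query has to visit the change vertices.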

There are nearly two dozen analytic queries that could be discussed here and all but two of them require temporal data. Something to think about.

All of these analytic queries are available for real-time display on an operational dashboard, since they execute efficiently in a few milliseconds. Many-hop analytic queries can run as often as you like and participate fully in your operational system; that is HTAP by way of TLD.

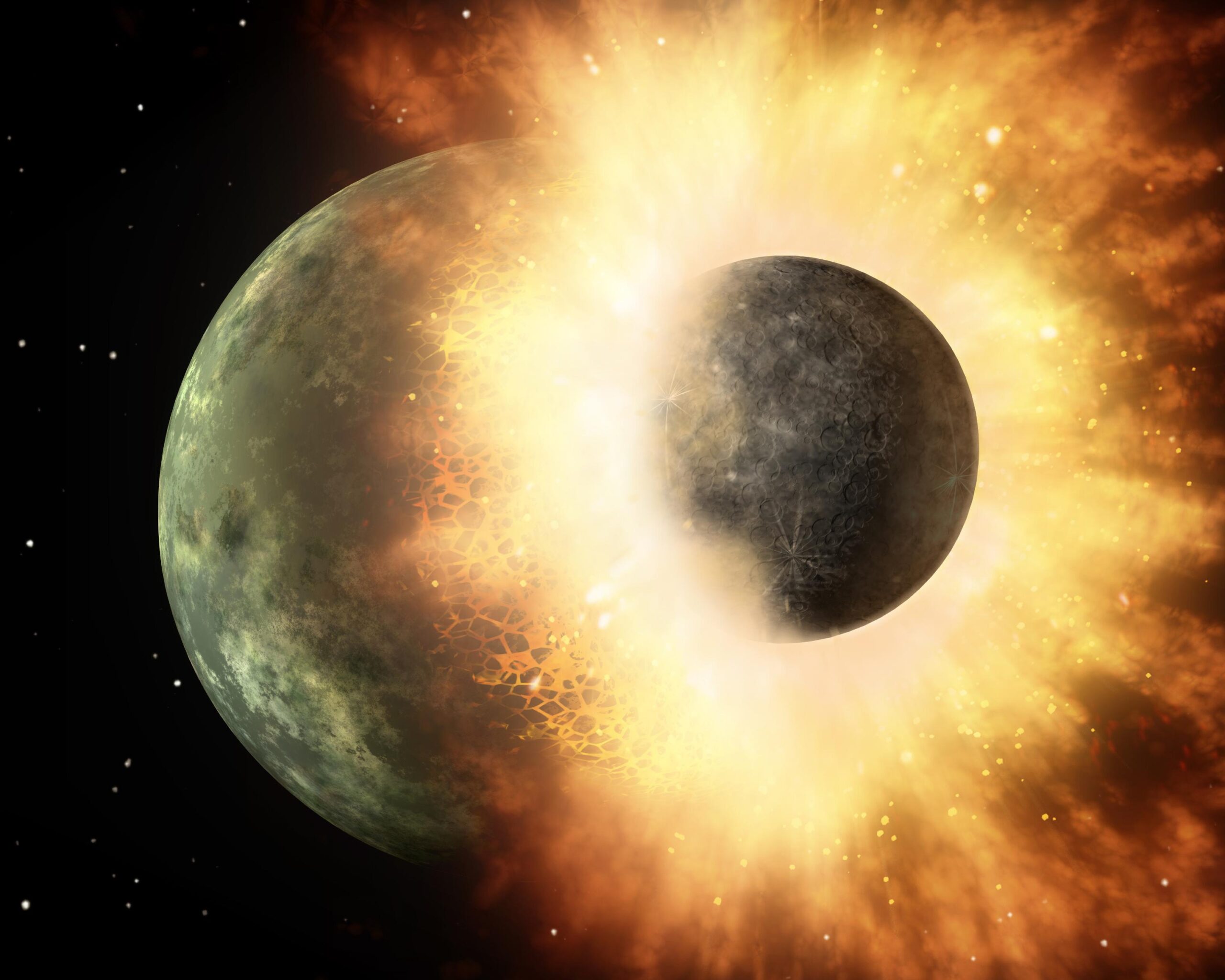

The Earth’s state as the planet Theia collides with it to form our moon. Temporal data tells a very interesting story. (Image credit: NASA)

Next we will look at the value of temporal data, both transactionally and analytically.