Ideas That Scale, HTAP by way of TLD

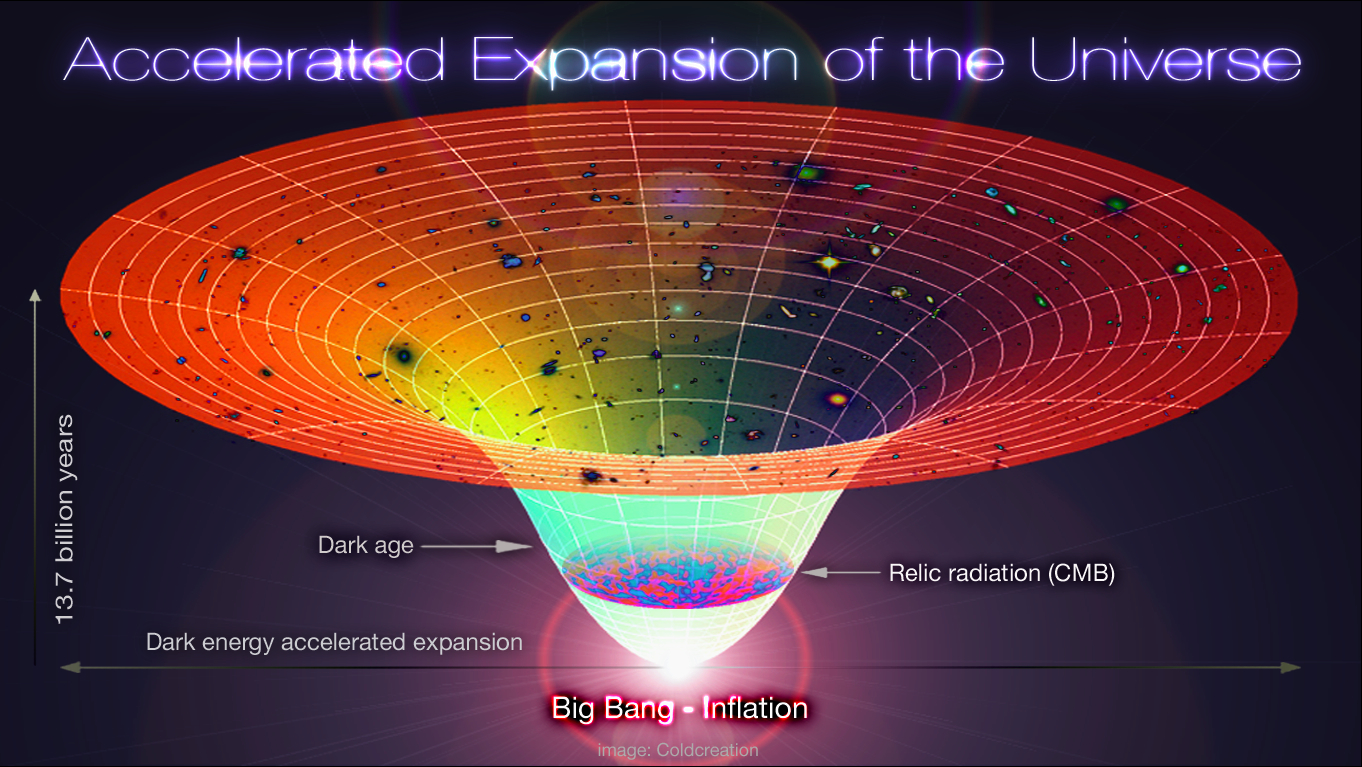

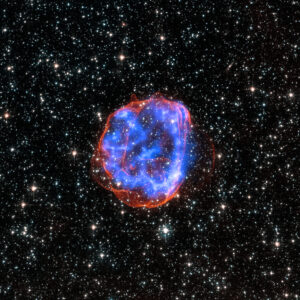

Our generation has the unique privilege of being able to see back to within a few million years of the beginning of time. How far back one is able to look depends on the technology that is used, but our Universe’s past is there to be seen. Temporal Linked Data® (TLD) naturally keeps a record of enterprise data changes to enable another kind of time travel, the story of enterprise data, for both operational and analytic purposes.

This series of weblogs introduces TLD as a transactional, low-code, enterprise class compute and temporal data cluster that naturally projects all writes to a world class big data graph analytics platform such as: 1) third-generation graph database for analysis, machine learning, and explainable artificial intelligence by way of TigerGraph, and / or 2) enterprise knowledge graph, ML, and AI by way of ReactiveCore.

For a high-level understanding we will briefly explore these subjects.

- Hybrid Transactional / Analytic Processing (HTAP) by way of TLD

- Explicit Connections over Implicit Relationships

- What’s New? Nothing. Everything.

- Well Bounded Business Semantics

For a more concrete understanding we will use a gamification example, described as follows.

- A Gamification Example for HTAP / TLD

- Agile Gamification

- Agile Gamification Model

- Augmenting Operational Data with Gamification

- Auto-Generated Backend

- Temporal Linked Data® as TigerGraph Schema with Temporality

- The Value of Temporal Data, Transactionally and Analytically

- Reasonable Conclusions about HTAP by way of TLD

The technological innovation represented by the BEAM ecosystem and third-generation graph database allow for the possibility of building enterprise systems that simultaneously account for operational and analytic concerns. We look forward to taking this fast-data, big-data, HTAP, Temporal Linked Data® journey with you.